Posts Tagged ‘security’

Privacy and Security: Less Rhetoric and More Product

Tim Cook’s recent remarks on privacy, described as “blistering” or an “epic subtweet,” amplified a discussion about the web and privacy. Given the polarizing framing of this topic, collectively as an industry (and beyond) we have been less than stellar at discussing these topics. We’ve also done a poor job at proposing broad initiatives to address concerns raised in the discussion.

We seem to be caught in that difficult situation of having defined the problem as requiring an all-or-nothing solution, which is never a good place to be because the reality is more nuanced. Dustin Curtis points out the nuance in his post, “Privacy vs. User Experience.”

Rather than debate extremes that are neither desirable nor technically possible, I want to suggest there are technical problems that can and should be solved, and doing so would make the Internet a better place for people using Internet services and businesses providing services.

“Get Over It”

Way back before there were mobile phones, today’s search engines or social networks, or cloud computing, Sun Microsystems co-founder Scott McNealy said, “You have zero privacy anyway. Get over it.” Yikes!

The statement at the time had elements of truth, fear, and absurdity. It was pre-bubble, heck it was still the 1990s and 1984 was still fresh in our collective psyche. The statement did, however, foretell a significant change in what was going to happen.

Such a debate is not new. While the scale is different, I recall three major products from reputable companies that introduced me to the absolutes and polarization of the privacy “debate”.

Robert Bork was nominated for the Supreme Court of the US in 1987 (and later failed confirmation in the Senate). One of the moments of the very contentious confirmation was the appearance of the nominee’s personal records from a video rental store (delivered to the press as a hand-written list). This was clearly a dubious act later codified to be illegal. I think for my generation, it was the first experience of how things would change in the digital age of record keeping via computer. At the time there were quite a few connections made to how the FBI maintained files on people, but this was the first time the “incriminating” information came from a benign consumer business.

Lotus Marketplace was a product developed in the late 1980s. It had the gall to collect data sources like US Census data and public phone number listings and put them on CD-ROMs for marketing people to use with Lotus 1-2-3 to plan and analyze marketing campaigns. Even worse, it had household and zip code level data about the US (all based on sources already in existence and available to businesses). Much debate centered on how one could take this data and potentially “triangulate” it to actually learn something about an individual. Likely due to the massive outcry, the product was never released to the market. From this early experience we can see that the combination of an existing data source and distribution of digital data changes the dynamic of privacy.

Credit card companies became famous for the offers inside your monthly statement in the 1980s—little paper inserts with offers to buy custom return address labels, go on cruises, or secure other financial products. Like confetti they would fall out of the envelope. These were the very definition of “junk mail”. Then the companies began to use your previous purchase history to target these inserts. If you were paying attention then you realized that junk mail started to look less and less junky. This “feature” turned into a fear that credit cards were selling your charging history to random companies. Of course that was not true (in fact such information was closely guarded). The way they worked was the credit card company would offer inserts matching specific target customers and insert them for a fee. Because financial companies were already tightly regulated, the path to today’s Byzantine opt-in/opt-out direct mail policies can be traced to this history.

Fast-forward to today and we know that the services we use amass significant information about how we interact with them. The medical establishment has my medical history available to a constellation of caregivers (and to me) that make delivery of quality care easier and faster. Credit card companies know my charging history and patterns and can alert me to fraud instantly (even if too often incorrectly). Netflix knows all the movies I watch (and even how much of them) and uses that to improve a highly valued recommendation engine. Pizza delivery services know what we order and can save time and effort by using that history (and also offer promotions based on that). Google Maps knows where I travel and when and proactively offers suggestions on when I should depart depending on current traffic. The examples are endless. In fact the benefits of maintaining my history of interaction with a service are immense and a deciding factor in which services I choose to use.

The risk that we have assumed on an individual service is that providers cannot maintain the integrity of their own services. This is a network technology risk as we have seen with Target or Home Depot. It is a human risk as we have seen with breaches like Sony where people set out to arbitrarily harm others. It is a national security risk such as we have seen with the recent attack on the federal government in the US, allegedly orchestrated by a nation-state.

The risk that a company will do “bad” things with the data it has as a result of using a service by all appearances is infinitesimal. Will some features feel creepy to some? Of course that is the case. Some people don’t like having their name and order remembered by the barista (a human form of big data).

The risk that a company will be breached and the data put to uses not intended by the company is not only there, but it is significant. This is a technology problem our industry needs to solve. One thing is clear and it is that the biggest companies are the biggest targets and the largest technology companies are (I would assert) the most savvy and adept at these issues. But the problem is incredibly difficult in a world of nation-states leading some attacks.

I am not a “get over it” person, but rather an engineer and product person that sees the desire of companies to use data to deliver far better services and that desire is leading to immense innovation in how commerce is conducted and the internet is used. At the same time this data is very attractive to bad actors for a variety of reasons and that is a technical challenge our industry will rise to as it has time and time again. This is first and foremost a security problem due to bad actors. The privacy challenges come from what happens with the data when used by good actors in the system and this is a much more nuanced challenge.

I do believe that if you simply want to skip using services that compile data then you should be able to opt out of services or to simply choose to use alternative services, but there is no obligation for any given service to provide the non-personalized, non-targeted, non-historic version of a service. The market for such services is likely to shrink and that might be unfortunate. The free market is like that. Sometimes something highly valued at a point in time becomes non-economic or scarce as companies compete for a larger market. I don’t have an easy answer for customers that want to use the internet without a trail, though I strongly support the services and technologies that allow for that (encryption, Tor, etc.). I think the evidence is that this is not where most people will go. Historically, if there is money to be made then businesses will be created to seek that opportunity.

But What About Web Privacy

Why all the kerfuffle over privacy, again? My view is that this is rooted in the experience of the web that is just getting worse and many are frustrated. Security breaches of private information compound this concern and are symbolic of technology challenges on the Internet. Security breaches are unrelated to privacy in the sense that breached systems are not ad-funded user profiles, but wholly orthogonal and essential line of business information. The challenge is that our collective experience on the web is the result of a mountain of technologies built out over the past 20 years in an effort to deliver services to consumers that are paid for by advertising.

The act of delivering services paid for by advertising is not only inherently good and beneficial, but also essential to the amazing spread and growth of internet services. It should be readily apparent that the rise of internet advertising supported services is singularly responsible for the mass scale growth of billion-customer services. That is only good.

It isn’t that all my data is in the cloud waiting to be mined—AT&T, Comcast, Blockbuster, American Express, Nordstrom, Safeway, Amazon, UPS, and more already had a crazy amount of information about me and I would love exactly none of that to be in the hands of a bad actor. Even Apple knows every song I ever bought (if this happens to leak, I am saying now that I bought Barry Manilow Live for a friend’s birthday party), every place I ever used Maps to visit, and all my mail and contacts. Google has much of this too. I know Google is not selling my name and that information to anyone, but like a credit card insert they will match an advertisement for services to “people who visit New York”.

The challenge is that in an effort to improve the revenue yield of services all too often technology solutions available were used, abused, or otherwise misused in ways that degraded the overall experience of the internet for too many. The problem is that web ads are awful experiences and getting worse. We need technical solutions to this 20-year pile of legacy features.

My view is that the horrible experience of browsing the web and seeing those ads that “interfere” with using the web, and the fear that this experience will become what we all experience in the pristine world of mobile, is at least partially and likely largely responsible for using privacy as the anchor of this debate. In Steve Jobs’ “Thoughts on Flash” he was completely accurate about the problems of the runtime. That runtime was used as the basis of ads. He may or may not have been against ads, but many people were quite frustrated by the technical execution of ads in Flash and so his appeal resonated. It is just that few of us could do much about it.

The industry did not stand still. Over the years we have seen browsers add pop-up blocking. While advertisers were angry, people cheered. We’ve seen a dramatic rise in ad-blockers. Yet we still see a constant stream of complaints on the web about “wait 5 seconds to see your story” or user experiences that test even the most savvy gamer when it comes to finding and clicking the close box. But this is the technology choice on the web, not the nature of advertising itself.

Fixing the Web

I was a strong supporter of evolving the browser to support features that allowed consumers to choose how to secure their experience. From popup blockers to Do Not Track I advocated for this type of control. The reason was not because I am against “free” services or want everyone to browse anonymously without footprints. The reason is because the web got so messy that the recourse seemed to be to help people as individuals.

On a personal note, championing features like Do Not Track (DNT) was one of the more educational chapters in my own career. I had never experienced the “slippery slope” defense quite like that—the idea that a feature was just an on-ramp to the apocalypse would not overstate the reaction to such a feature. The argument against DNT was that overnight the free internet would vaporize, which was also the argument against popup blockers or the removal of Flash.

There are three parts of today’s web that are technology problems waiting for solutions. The solutions are either difficult or undesirable but I believe solving them would go a long way towards moving the debate beyond a choice between “selling your personal information” or “there will be no services on the Internet”.

¶ Ads are awful. First and foremost, today’s ad formats are relatively hostile to consumers using services. We all know that ads want to be noticed. That’s a given. The openness and programmability of HTML5 and browsers created an open season on the technology used in ads and while there is plenty of innovation there are more negatives. Even the biggest and most popular sites can grind a browser to a halt on a powerful desktop PC. With television, for years advertisers tried tricks like raising the volume of an ad in order to get you to notice. This was fixed in the US by government regulation in 2012 with the CALM Act. We need such a movement for the internet. I believe the presence of advertising networks and internet standards bodies already in place (that create standard ad formats) could easily create standards around fly overs, popups, tiny close boxes, interstitial timings, audio and video playback, and so much more. We of course don’t want to stifle innovation, shut off A/B testing, or otherwise become the government but certainly we can create better technology and designs for advertisement. The popularity of browser based ad blockers is not about “privacy” but just about a desire to read more stuff and use more services in some reasonable way, I would assert.

¶ Content responsibility is lacking. One of the biggest places where advertising meets real-world security concerns is when advertisements themselves are vectors for security exploits. The advertising networks of today are well-known repositories for the distribution of zero-day exploits and malware insertion. While one could fault the browsers for not being able to secure against this, one must also fault the ad networks for allowing this content in the front door. One can also fault the sites that host this. As a consumer visiting a site, neither the site nor the ad network act responsibly relative to this type of content. All will do takedowns but the effort to own up to this challenge is not what I think it could be (there’s plenty of hard work, but not enough). Ad formats themselves are part of the challenge. Should ads be allowed the full power of the browser and runtimes? Should we define a maximal set of capabilities that ads can use? There is lots to be done here.
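One way to picture the "maximal set of capabilities" question is as an allowlist check at the point where an ad is accepted. The sketch below is only an illustration under stated assumptions: the capability names and both function names are hypothetical, and no ad network or standards body is known to define this list.

```python
# Hypothetical capability names, invented for illustration only.
ALLOWED_AD_CAPABILITIES = frozenset({
    "static_image",
    "text",
    "click_through_link",
})


def rejected_capabilities(requested):
    """Return the requested capabilities that fall outside the allowlist."""
    return sorted(set(requested) - ALLOWED_AD_CAPABILITIES)


def admit_ad(requested):
    """Admit an ad only if everything it asks for is on the allowlist."""
    return not rejected_capabilities(requested)


if __name__ == "__main__":
    creative = ["text", "autoplay_audio", "script_execution"]
    print("admitted:", admit_ad(creative))
    print("rejected:", rejected_capabilities(creative))
```

The point of an allowlist rather than a blocklist is that anything new is refused until someone decides to permit it, which is the opposite of giving ads the full power of the browser by default.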

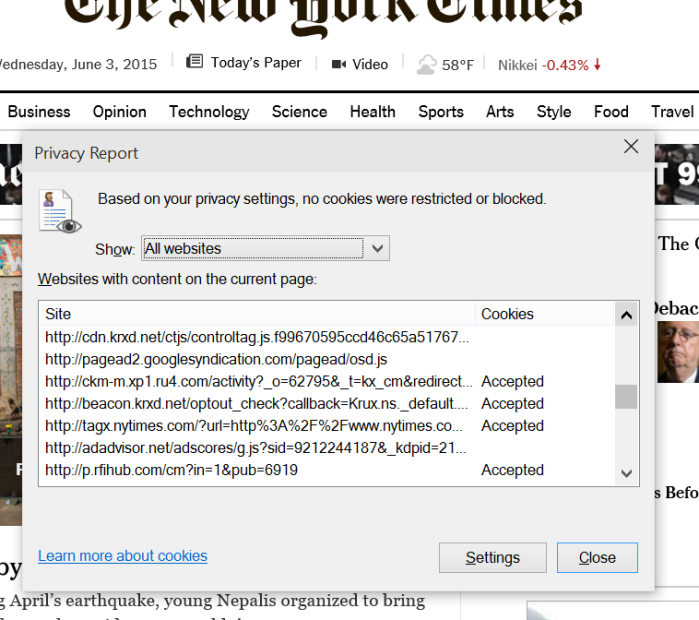

¶ Accountability is non-existent. While we were working on “Do Not Track” one of the things that surprised me the most was the lack of accountability for some core information about people that I believe is a privacy challenge. Some have talked about this as the problem with cookies (again a polarizing way to describe something since I also like not having to sign into services I use all the time on my home PC again and again). But mostly this is probably the most unsavory part of the web when it comes to this issue of privacy and security. Quite simply, once I start using a site, especially if I am logged in, then the ability for that site to see and store my internet traversal history is just too easy. The accountability for this is nowhere to be seen. Even the most trustworthy of sites are in need of improvement along these lines. When I visit nytimes.com (just an example of an incredibly reputable site) I am visiting dozens and dozens of other web sites just on the home page. Below you can see a portion of the Web Page Privacy view from Internet Explorer showing all the URLs that compose a page. This is quite a surprise to most people who think that the links might go to a few photos or to some other servers for code or features. These are not subdomains of nytimes.com but whole other sites (honestly, do I really trust the domain ru4.com, which by the way resolves to “Perfect Privacy Incorporated” but has no home page or corporate information page). What are the privacy policies of these sites? What information do they get? Do they combine this information across their customers? It isn’t that they do this, it is that as a consumer I have no knowledge and as a site I visit the Times offers no transparency. This to me feels like a big challenge. I don’t mind the Times having my browsing history for the Times, not at all especially if I am logged on. I do mind all these companies with mysterious URLs following me around. 
When a company has my transaction history and uses it to deliver services, it does not send that history to others. Instead, it has others send information to it to use with the history. Why can’t sites implement ads and analytics in this manner? You can see the lack of accountability in the terms of service, as shown for the nytimes.com site below.
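To make the "dozens of other web sites" observation concrete, here is a minimal sketch of how one might tally the outside hosts a page pulls from, given a list of the URLs it loads. The function name, the example domains, and the "last two labels" rule for deciding what counts as the same site are all assumptions for illustration; a careful version would consult the Public Suffix List.

```python
from collections import Counter
from urllib.parse import urlsplit


def third_party_hosts(page_url, resource_urls):
    """Count requests that go to hosts outside the page's own site."""
    first_party = urlsplit(page_url).hostname or ""
    # Naive assumption: the "site" is the last two labels of the hostname.
    site = ".".join(first_party.split(".")[-2:])
    counts = Counter()
    for url in resource_urls:
        host = urlsplit(url).hostname
        if host is None:
            continue  # relative URL, so it loads from the page's own host
        if host == site or host.endswith("." + site):
            continue  # same site or one of its subdomains
        counts[host] += 1
    return counts


if __name__ == "__main__":
    # Made-up domains, not taken from any real page.
    resources = [
        "https://static.example-news.com/app.js",
        "https://tracker.example-ads.net/p.gif",
        "https://tracker.example-ads.net/q.gif",
        "/img/logo.png",
        "https://cdn.example-metrics.org/m.js",
    ]
    for host, n in third_party_hosts("https://www.example-news.com/", resources).items():
        print(host, n)
```

A list like this answers only "who is contacted," not what each host receives or how it combines that information, which is the accountability gap described above.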

Solutions are on the way

I believe there are deep concerns that the mobile internet will devolve into the desktop internet and we will lose the clean slate we currently have. This would be a shame because we need ad-supported services on mobile as well. We know the current experience of using a browser on mobile is racing towards the desktop—ironically because the browsers are getting better with video, script and runtime support and more.

The recent announcements by Facebook and partners show how innovation can happen. By providing a mechanism for ad-supported content to appear within a Facebook app experience natively and in a format that does not (necessarily) support many of the bad practices of the desktop web my view is that advertising can be more natural and at the same time relevant. My hope is that the runtime and/or the policies do not support the arbitrary nature of the desktop web and the experience does improve dramatically and stay that way for a while.

While all of this is taking place in the consumer world, the business computing landscape is being altered by the encroachment of consumer services. The natural reaction of the enterprise is to disable or turn off this access, which many believe is a losing strategy. In this context the biggest concern I would bring is the notion that the business internet will live alongside the consumer internet and all will be good. As a consumer when I use my mobile device for work and personal, I want that conflation to exist in the service data I use. If I buy books for my own personal interest but use them for work or even get reimbursed by work, then I want my Amazon profile to have that. If I use Maps to navigate to partners or customers I don’t want to sign on and sign off or use a different Maps instance, but want to train a single instance. If I use a productivity tool for home and work, I want the usage and quality data to flow to the service so that the product gets better for how I use it. In all cases, the unified view of “me” makes everything I use better and that makes me a better employee using tools. I’d hate for IT to see privacy as another thing to enforce by degrading my experience.

¶ Ultimately, the web will continue to evolve and free services will continue to grow. That is super important to the future for the next 3 billion internet users. There will always be a distribution of views of how much information should be saved and what services will be valued. We should not judge each other on that any more than we can expect every service to cater to every perspective on this topic. For a moment, we can look at the challenges we’re discussing and see the engineering and product development work that can be done. I believe we can collectively improve the current situation if we take steps to design new products and services that meet the needs of all parties.

# # # # #

Why Sony’s Breach Matters

This past year has seen more wide-spread, massive-scale, and damaging computer system breaches than any time in history. The Sony breach is just the latest—not the first or most creative or even the most destructive computer system breach. It matters because it is a defining moment and turning point to significant and disruptive changes to enterprise and business computing.

The dramatic nature of today’s breaches impacts the enterprise computing infrastructure at both the endpoint and server infrastructure points. This is a good news and bad news situation.

The bad news is that we have likely reached the limits as to how much the existing infrastructure can be protected. One should not dismiss the Sony breach because of their simplistic security architecture (a file Personal passwords.xls with passwords in it is entertaining but not the real issue). The bad news continues with the reality of the FBI assertion of the role of a nation state in the attack or at the very least a level of sophistication that exceeded that of a multi-national corporation.

The good news is that several billion people are already actively using cloud services and mobile devices. With these new approaches to computing, we have new mechanisms for security and the next generation of enterprise computing. Unlike previous transitions, we already have the next generation handy and a cleaner start available. It is important to consider that no one was “trained” on using a smartphone—no courses, no videos, no tutorials. People are just using phones and tablets to do work. That’s a strong foundation.

In order to better understand why this breach and this moment in time is so important, I think it is worth taking a trip through some personal history of breaches and reactions. This provides context as to why today we are at a moment of disruption.

Security tipping points in the past

All of us today are familiar with the patchwork of a security architecture that we experience on a daily basis. From multiple passwords, firewalls, VPN, anti-virus software, admin permissions, inability to install software, and more we experience the speed-bumps put in place to thwart future attacks through some vector. To put things in context, it seemed worthwhile to talk about a couple of these speed-bumps. With this context we can then see why we’ve reached a defining moment.

For anyone less inclined to tech or details: below, I describe three technologies that were–each at its own moment in time–considered crucial by a healthy population of business users: MS-DOS TSRs, Word macros, and Outlook automation. The context around them changed over time, driving technology changes–like the speed bumps I list above that previously would have been dismissed as too disruptive.

Starting as a programmer at Microsoft in 1989 meant I was entering a world of MS-DOS (Windows 3.0 hadn’t shipped and everyone was mostly focused on OS/2). If one was a University hire into the Apps group (yes we called it that) you spent the summer in “Apps Development College” as a training program. I loved it. One thing I had to do though was learn all about viruses.

You have to keep in mind that back then most PCs weren’t connected to each other by networking, even in the workplace. The way you got a virus was by someone giving you a program via floppy (or downloading at 300 baud from a BBS) that was infected. Viruses on DOS were primarily implemented using a perfectly legitimate programming technique called a “Terminate and Stay Resident” program, or TSR. TSRs provided many useful tools to the DOS environment. My favorite was Borland Sidekick, which I had installed on all the first-time PCs at the Cold War defense contractor where I spent my summers. Unfortunately, a TSR virus once installed could trap keystrokes or interfere with screen or disk I/O.

I was struck at the time how a relatively useful and official operating system function could be used to do bad things. So we spent a couple of weeks looking at bad TSRs and how they worked. I loved Sidekick and so did millions. But the cost of having this gaping TSR hole was too high. With Windows (protected mode) and OS/2, TSRs were no longer allowed. It turned out to cause quite an uproar as many people had come to rely on TSRs for things like dialing their phone (really), recording notes, calendaring, and more. My lesson was that the pain and challenges caused were worse than breaking the workflow, even if that was all 20M people using business PCs at the time.

With the advent of Windows and email, businesses had a good run of both improved productivity and a world pretty much free of viruses. With Windows more and more businesses had begun to deploy Microsoft Word as well as to connect employees with email. Emailing documents around came to replace floppy disks.

Then in late 1996, seemingly all at once everyone started opening Word documents to a mysterious alert like the one below.

This annoying but benign development was actually a virus. The Word Concept virus (technically a worm, which at the time was a big debate) was spreading wildly. It attached itself to an incredibly useful feature of Word called the AutoOpen macro. Basically Word had a snazzy macro language that could do anything automatically that you could do in Word just sitting in front typing (more on this later). AutoOpen allowed these macros to run as soon as you opened a document. You’d receive a document with Concept code in AutoOpen and upon opening the document it would infect the default (and incredibly useful) template Normal.dot and then from then on every document you opened or created was subsequently infected. When you mailed a document or placed it on a file server, everyone opening that document would become infected the same way. This mechanism would become very useful for future viruses.

Looking at this on the team we were rather consternated. Here was a core business use case. For example, AutoOpen would trigger all sorts of business processes such as creating a standard document with the right formats and metadata or checking for certain conditions in a document management system. These capabilities were key to Word winning in the marketplace. Yet clearly something had to be done.

We debated just removing AutoOpen but settled on beginning a long path towards a combination of warning messages and trust levels for Macros to maintain business processes and competitive advantages. One could argue with that choice but the utility was real and alternatives looked really bad. This lesson would come into play in a short time.

The problem we had was that these code changes needed to be deployed. There was no auto update and most companies were not yet on the internet. We issued the patch which you could order on CD or download from an FTP site. We remanufactured the product and released a “point release” and so on (all these details are easily searched and the exact specifics are not important). The damage was done and for a long time “Concept removal” was itself a cottage industry.

Fast forward a couple of years and one weekend in 1999 I was at home and my phone rang (kids, that is the strange device connected to the wall that your parents have). I picked up my AT&T Cordless phone like Jerry used to have and on the other end of the phone was a reporter. She got my number from a PR contact who she woke up. Hyperventilating, all I could make out was that she was asking me about “Melissa”. I didn’t know a Melissa and was pretty confused. I couldn’t check my email because I only had one phone line (kids, ask your parents about that problem). I hung up the phone and promised to call back, which I did.

I connected to work and downloaded my email. Upon doing so I became not only an observer but a participant in this fiasco. My inbox was filled with messages from friends with the subject line “Here is the document you asked for…don’t show anyone else :)”. Every friend from high school and college as well as Microsoft had sent me this same mail. Welcome to the world of the Melissa virus.

This virus was a document that took advantage of a number of important business capabilities in Word and Outlook. Upon opening the attached document the first thing it managed to do was turn off Word’s new security setting that was previously added when protecting against Concept. Long story. Of course it didn’t really matter because vast numbers of IT Pros had already disabled this feature (disabling it was possible as part of the feature) in order to keep line of business systems working. A lot of lessons there that inform the next set of choices.

In addition, the Macro in that attachment then used the incredibly useful Outlook extensibility capabilities known as the VBA object model to enumerate your address book and automatically send mail to the first 50 contacts. I know to most of you the idea of this behavior being useful is akin to lighting up a cigar in the middle of a pitch meeting, but believe it or not this capability was exactly what businesses wanted. With Outlook’s extensibility we gained all sorts of mini-CRM systems, time organizers, email management, and more. Whole books were written about these features.

Once again we worked on a weekend trying to figure out how to trade off functionality that not only was useful but was baked into how businesses worked. We valued compatibility and our commitment to customers immensely but at the same time this was causing real damage.

The next day was Monday and the headline on USA Today was about how this virus had spread to estimates of 20% of all PCs and was going to cost billions of dollars to address (I can’t find the actual headline but this will do). I don’t know about you, but waking up feeling like I caused something like that (taking ownership and accountability as managers do) was very difficult. But it also made the next choices more reasonable.

We immediately architected and implemented a solution (I say we—I mean literally the whole Outlook team of about 125 engineers focused on this). We introduced the Outlook E-mail Security Update. This update essentially turned off the Outlook object model; would no longer open a vast majority of attachment types at all; and would always prompt for all attachments. We would also update all the apps to harden the macro security work. These changes were Draconian and unprecedented.

Thinking back to the uproar over breaking Sidekick in Windows 3, this uproar was unprecedented. Enterprise customers were on the phone immediately. We were doing white papers. We were working with third parties who built and thrived on Outlook extensibility. We were arming consultants to rebuild workflows and add-ins. While we might have “caused” billions in damage with our oversight (in hindsight), it seemed like we were doing more damage. Was the cure worse than the disease?

Prevent, rather than cure?

Fast forward through Slammer, Blaster, ILOVEYOU, and on and on. Continue through internet zone, view only mode for attachments, Windows XP SP2 and more. The pattern is clear. We had well-intentioned capabilities that when strung together in novel ways went from enterprise asset to global liability with catastrophic side effects.

Each step in the process above resulted in another speed-bump or diversion. Through the rise of the internet and the wide spread use of the massively more secure NT OS kernel, vast improvements have been made to computing. But the bad actors are just bad actors. They aren’t going away. They adapt. Now they are supported by nation states or global criminal operations. Whether it is for terror, political gain or financial gain, there is a great deal to be gained. Today’s critical infrastructure is powered by systems that have major security challenges. Trillions of dollars of infrastructure is out there and there’s risk in many ways.

My personal view is that there is no longer an ability to add more speed-bumps, and even if there were, it would not address the changing environment. The road is covered with bumps and cones, but it is still there. The modern enterprise PC and Server infrastructures have been infiltrated with tools, processes, and settings to reduce the risk in today’s environment. Unfortunately, in the process they have become so complex and hard to manage that few can really know these systems. Those using these systems are rapidly moving to phones and tablets just to avoid the complexity, unpredictability, and performance challenges faced in even basic work.

That is why we are at a defining moment.

What is wrong with the approach or architecture?

One could make a list a mile long of the specific issues faced with computing today. One could debate whether System A is more or less susceptible than System B. The reality is whether you’re talking Windows, OS X, Linux on desktop or client, they are for all practical purposes equivalent: an Intel-based OS architected in the 1980’s and with capabilities packaged at the user level for that era.

It is entirely possible to configure an environment that is as secure as possible. The real question is whether it would work the way you had hoped and whether it would be maintainable in the face of routine computing tasks by average people. I proudly say I was never infected, except for Melissa and that time I used WiFi in China and that USB stick and so on. That is the challenge.

In the broadest sense, there are three core challenges with this architecture, which includes not just the OS, but the hardware, peripherals, and apps across the platform. As any security expert will tell you, a system is only as secure as its weakest link.

Surface area of knobs and dials for end-users or IT. For 20 years, software was defined by how it could be broadly tweaked, deeply customized, or personalized at every level. The original TSRs were catching the most basic of keystrokes (ALT keys) and providing much desired capabilities. The model for development was such that even when adding new security features, almost every protection could be turned off (like Macro security). Those who think this is only about clients should consider what a typical enterprise server or app is engineered to do. The majority of engineering effort in most enterprise server OS and apps goes into ways to customize or hook the app with custom code or unique configurations. Even the basics of logging on to a PC are all about changing the behavior of a PC with an execution engine, under the guise of security. The very nature of managing a server or end point is about turning knobs and dials. What ports to open? What apps to run? What permissions? Firewall rules? Protocols? And on and on. This surface area, much of it designed to optimize and create business value, is also surface area for bad actors. It is not any one thing, but the way a series of extensions can be strung together for ill effect. Today’s surface area across the entire architectural stack is immense and well beyond any scope or capability for audit, management, or even inventory. Certainly no single security engineer can navigate it effectively.

Risk of execution engines. The history of computing is one of placing execution engines inside every program. Macro languages, runtimes, and more: execution engines layered on top of programs that are themselves execution engines. Macros or custom “code” define the generation. Apps all had the ability to call custom code and to tap directly into native OS services. Having some sort of execution engine and ability to communicate across running programs was not just a feature but a business and competitive necessity. All of this was implemented at the lowest, and most flexible, level. Few would have thought that providing such a valuable service, one in use and deployed by so many, would prove to be used for such negative purposes. Today’s platforms have an almost uncountable number of execution engines. In fact, many tools put in place to address security are themselves engines and those too have been targeted (anti-virus, router front ends, and more have all recently been the target of one of many steps in exploits). Today’s mobile apps can’t even make it through the app store approval process with an execution engine. See Steve Jobs’ Thoughts on Flash.

Vector of social. Technology can only go so far. As with everything, there’s always a solid role for humans to make mistakes or to be tricked into making mistakes. Who wouldn’t open a document that says “Don’t open”? With a hundred passwords, who wouldn’t write them down somewhere? Who wouldn’t open an email from a close college friend? Who wants the inconvenience of using SMS to sign on to a service? Why wouldn’t you use the USB memory stick given to you at a Global Summit of world leaders or connect to the WiFi at an international business class hotel? There are many things where taking humans out of the equation is going to make the world safer and better (cars, planes, manufacturing) to free up resources for other endeavors. Using computing to communicate, collaborate, and create, however, is not on a path to be human-free.

There are other ways to describe the current state of challenges and certainly the list of potential mitigations is ever-growing. When I think of the experience over the past 20 years of escalations, my view is that these are the fundamental challenges to the platform. More speed-bumps will do nothing to help.

Why are we in much better shape?

Well if you made it this far you probably think I painted a rather dystopian view of computing. In a sense I am just thinking back to that weekend phone call about my new friend Melissa. I can empathize with those professionals at Sony, Target, Home Depot, Neiman Marcus, and the untold others who have spent weekends on breaches. I can also empathize with the changes that are about to take place.

It is a good idea to go through and put in more speed bumps and triple check that your IT house is in order. It is unfortunate that most IT professionals will be doing so this holiday season. That is the job and work that needs to be done. This is a short-term salve.

When the dust settles we need a new approach. We need the equivalent of breaking a bunch of existing solutions in order to get to a better place. If there’s one lesson from the experiences portrayed in this post, it is that no matter how intense the disruption one creates it won’t go far enough and it will still cause an untold amount of pushback and discomfort from those that have real work to get done. Those in charge or with self-declared technical skill will ask for exceptions because they can be trusted or will act differently than the masses. It only takes one hole in a system and so exceptions are a mistake. I definitely have been wrong personally in that regard.

All is not lost however. We are on the verge of a new generation of computing that was designed from the ground up to be more secure, more robust, more manageable, more usable, and simply better. To be clear, this is absolutely positively not a new state of zero risk. We are simply moving the barriers to a new road. This new road will level the playing field and begin a new war with bad actors. That’s just how this goes. We can’t rid the world of bad actors but we can disrupt them for a while.

New OS and App architectures. Today’s modern operating systems designed for mobile running on ARM decidedly reset some of the most basic attack vectors. We can all bemoan app store (or app store approval) or app sandboxing. We can complain about “App would like access to your Photos”. These architectural changes are significant barriers to bad actors. One day you can open a maliciously crafted photo attachment and have a buffer overrun that then plants some code on a PC to do whatever it wants (simplified description). And then the next day that same flow on a modern mobile OS just doesn’t work. Sure, lots of speed-bumps, code reviews, and more have been put in place, but the same sequence keeps happening because 20 years and 100’s of millions of lines of code can’t get fixed, ever. A previous post detailed a great deal more about this topic.

Cloud services designed for API access of data. The cloud is so much more than hosting existing servers and server products. In fact, hosting an existing server app or OS is essentially a speed-bump and not a significant win for security. Moving existing servers to be VMs in a public or “private” cloud adds complexity for you and a minimal bump for bad actors. Why is that? The challenge is that all the extensibility and customizability is still there. Worse, those customers moving to a hosted world for their existing capabilities are asking to maintain parity. Modern cloud-native products designed from the ground up have a whole different view of extensibility and customization from the start. Rather than hooks and execution engines, the focus is on data and API customization. The surface area is much smaller from the very start. For some this might seem like too subtle a difference and certainly some will claim that moving to the cloud is a valid hardening step. For example, in a cloud environment you don’t have access to “all the files” for an organization by using easy drag and drop end-user tools from an end-point. My view is that now is a perfect time to reduce complexity rather than simply hide it by a level of indirection. This is enormously uncomfortable for IT that prided itself on a combination of excellent work and customization and configuration with a business need.
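To make the contrast between hooks and a narrow data API concrete, here is a minimal sketch in Python. It is only an illustration and not any particular vendor’s API: the `Token` type, the `read:<id>` scope strings, the `DOCUMENTS` store, and `read_document` are all hypothetical names made up for this example. The point is that the caller never gets a file system or an execution engine, only one operation on one named piece of data it was granted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Token:
    """Hypothetical access token: a user plus the exact scopes granted."""
    user: str
    scopes: frozenset  # e.g. frozenset({"read:doc-42"})


# Hypothetical document store standing in for a cloud service's data.
DOCUMENTS = {"doc-42": "Q3 plan", "doc-99": "Payroll"}


def read_document(token: Token, doc_id: str) -> str:
    """Return one document, but only if the token names it explicitly.

    There is no "list everything" or "run this code" entry point, so
    the surface area is limited to this single, auditable operation.
    """
    if f"read:{doc_id}" not in token.scopes:
        raise PermissionError(f"{token.user} has no read access to {doc_id}")
    return DOCUMENTS[doc_id]
```

A token scoped to `read:doc-42` can fetch that one document and nothing else; asking for `doc-99` raises `PermissionError` rather than falling through to some broader default.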

Cloud native companies and products. When engineers moved to writing Windows programs from DOS programs whole brain patterns needed to be rewired. This same thing is true when you move from client and server apps to mobile and cloud services. You simply do everything in a different way. This different way happens to be designed from the start with a whole different approach to security and isolation. This native view extends not just to how features are exposed but to how products are built of course. Developers don’t assume access to random files or OS hooks simply because those don’t exist. More importantly, the notion that a modern OS is all about extensibility or arbitrary code execution on the client or about customization at the implementation level is foreign to the modern engineer. Everyone has moved up the stack and as a result the surface area is dramatically reduced and complexity removed. It is also a reality that the cloud companies are going to be security first in terms of everything they do and in their ability to hire and maintain the most sophisticated cyber security groups. With these companies, security is an existential quality of the whole company and that is felt by every single person in the entire company. I know this is a heretical statement, but when you look at the companies that have been breached these are some of the largest companies with the most sophisticated and expensive security teams in non-technology businesses. Will a major cloud vendor be breached? It is difficult to say that it won’t happen. The odds are so much more in favor of cloud-native providers than even the most excellent enterprise.

New authentication and infrastructure models. Imagine a world of ubiquitous two-factor authentication and password changing verified by SMS to a device with location awareness and potentially biometrics and even simple PINs. That’s the default today, not some mechanism requiring a dongle, VPN, and a 10 minute logon script. Imagine a world where firewalls are crafted based on software that knows the reachability of apps and nodes and not on 10’s of thousands of rules managed by hand and essentially untouchable even during a breach. That’s where infrastructure is heading. This is the tip of the iceberg, but things in this world of basic networking, identity, and infrastructure are being dramatically changed by software and cloud services, well beyond just apps and servers.
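The idea of firewalls derived from what software knows about reachability, rather than from hand-managed rules, can be sketched roughly as follows. This is a toy illustration under stated assumptions, not a description of any real product: the `DEPENDS_ON` inventory, the `PORTS` table, and the `allow_rules` and `is_allowed` helpers are hypothetical names invented for the example.

```python
# Hypothetical inventory: each app declares which services it must reach.
DEPENDS_ON = {
    "web": ["api"],
    "api": ["db", "cache"],
    "db": [],
    "cache": [],
}

# Hypothetical listening port for each service.
PORTS = {"web": 443, "api": 8443, "db": 5432, "cache": 6379}


def allow_rules(depends_on, ports):
    """Derive the allow-list of (source, destination, port) flows.

    Every rule comes from a declared dependency; anything not derived
    here is denied by default, so there is nothing to maintain by hand.
    """
    return {
        (src, dst, ports[dst])
        for src, dsts in depends_on.items()
        for dst in dsts
    }


def is_allowed(rules, src, dst, port):
    """Default-deny check against the derived rule set."""
    return (src, dst, port) in rules


RULES = allow_rules(DEPENDS_ON, PORTS)
```

With this inventory the rule set contains exactly three flows: web to api, api to db, and api to cache. A direct connection from web to db is refused because no declared dependency produces it, and changing the inventory regenerates the rules instead of adding one more entry to a list nobody dares touch.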

Every major change in business computing that came about because of a major breach or disruption of services caused a difficult or even painful transition to a new normal. At each step business processes and workflow were broken. People complained. IT was squeezed. But after the disruption the work began to develop new approaches.

Today’s mobile world of apps and cloud services is already in place. It is not a plug-in substitute for what we have been using for 20 or more years but it is also better in so many ways. Collaboration, mobility, flexibility, ease of deployment and more are vastly improved. Sharing, formatting, emailing and more will change. It will be painful. With that challenge will come a renewed sense of control and opportunity. Like the 15 or so years from TSRs to Melissa, my bet is we will have a period of time free of bad actors, at least in the old ways, for enterprises that make the changes needed.

—Steven Sinofsky (@stevesi)

# # # # #